Map by Dr. John Snow of London, showing clusters of cholera cases in the 1854 Broad Street cholera outbreak. This was one of the first uses of map-based spatial analysis.

In statistics, spatial analysis or spatial statistics includes any of the formal techniques that study entities using their topological, geometric, or geographic properties. The phrase properly refers to a variety of techniques, many still in their early development, that use different analytic approaches. These techniques are applied in fields ranging from astronomy, with its studies of the placement of galaxies in the cosmos, to chip fabrication engineering, with its use of 'place and route' algorithms to build complex wiring structures. The phrase is often used in a more restricted sense to describe techniques applied to structures at the human scale, most notably in the analysis of geographic data. It is sometimes even used to refer to a specific technique in a single area of research, for example, to describe geostatistics.

The history of spatial analysis begins with the earliest mapping, surveying and geography, although the techniques of spatial analysis were not formalized until the later part of the twentieth century. Modern spatial analysis focuses on computer-based techniques because of the large volumes of data involved, the power of modern statistical and geographic information system (GIS) software, and the complexity of the computational modeling. Spatial analytic techniques have been developed in geography, biology, epidemiology, sociology, demography, statistics, geographic information science, remote sensing, computer science, mathematics, and scientific modelling.

Complex issues arise in spatial analysis. Many of them are neither clearly defined nor completely resolved, and they form the basis for current research. The most fundamental of these is the problem of defining the spatial location of the entities being studied. For example, a study on human health could describe the spatial position of humans with a point placed where they live, with a point located where they work, or with a line describing their weekly trips. Each choice has dramatic effects on the techniques that can be used for the analysis and on the conclusions that can be drawn. Other issues in spatial analysis include the limitations of mathematical knowledge, the assumptions required by existing statistical techniques, and problems in computer-based calculations.

Classification of the techniques of spatial analysis is difficult because of the large number of different fields of research involved, the different fundamental approaches which can be chosen, and the many forms the data can take.


The history of spatial analysis

Spatial analysis can perhaps be considered to have arisen with early attempts at cartography and surveying, but many fields have contributed to its rise in modern form. Biology contributed through botanical studies of global plant distributions and local plant locations, ethological studies of animal movement, landscape ecological studies of vegetation blocks, ecological studies of spatial population dynamics, and the study of biogeography. Epidemiology contributed through early work on disease mapping, notably John Snow's mapping of a cholera outbreak, through research on mapping the spread of disease, and through locational studies for health care delivery. Statistics has contributed greatly through work in spatial statistics. Economics has contributed notably through spatial econometrics. Geographic information science is currently a major contributor because geographic software is so important in the modern analytic toolbox. Remote sensing has contributed extensively in morphometric and clustering analysis. Computer science has contributed extensively through the study of algorithms, notably in computational geometry. Mathematics continues to provide the fundamental tools for analysis and to reveal the complexity of the spatial realm, for example, with recent work on fractals and scale invariance. Scientific modelling provides a useful framework for new approaches.

Fundamental issues in spatial analysis

Spatial analysis confronts many fundamental issues in the definition of its objects of study, in the construction of the analytic operations to be used, in the use of computers for analysis, in the limitations and particularities of the analyses which are known, and in the presentation of analytic results. Many of these issues are active subjects of modern research.

Common errors often arise in spatial analysis. Some are due to the mathematics of space, some to the particular ways data are presented spatially, and some to the tools that are available. Census data protects individual privacy by aggregating data into local units, and this aggregation raises a number of statistical issues. Computer software can easily calculate the lengths of the lines it defines, but these lengths may have no inherent meaning in the real world, as was shown for the coastline of Britain.

These problems represent one of the greatest dangers in spatial analysis because maps are such a powerful medium of presentation. When results are presented as maps, the presentation combines spatial data, which is generally very accurate, with analytic results, which may be grossly inaccurate. Some of these issues are discussed at length in the book How to Lie with Maps.[1]

Spatial characterization

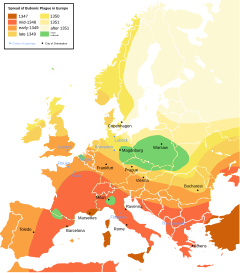

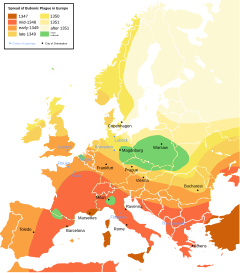

Spread of bubonic plague in medieval Europe. The colors indicate the spatial distribution of plague outbreaks over time. Possibly due to the limitations of printing or for a host of other reasons, the cartographer selected a discrete number of colors to characterize (and simplify) reality.

The definition of the spatial presence of an entity constrains the possible analyses that can be applied to that entity and influences the final conclusions that can be reached. This property holds for all analysis. It is particularly important in spatial analysis, however, because the tools used to define and study entities favor specific characterizations of the entities being studied. Statistical techniques favor the spatial definition of objects as points because very few statistical techniques operate directly on line, area, or volume elements. Computer tools favor the spatial definition of objects as homogeneous and separate elements because the available computational structures are primitive and easy to create.

Arbitrary effects may also be introduced by the spatial bounds or limits placed on the phenomenon or study area, because spatial phenomena may be unbounded or have ambiguous transition zones. Imposing a boundary creates edge effects, since spatial dependency and interaction outside the study area are ignored. It also imposes artificial shapes on the study area that can affect apparent spatial patterns such as the degree of clustering. One possible solution is similar to the sensitivity analysis strategy for the modifiable areal unit problem (MAUP): change the limits of the study area and compare the results of the analysis under each realization. Another possible solution is to overbound the study area. In spatial modeling and simulation, edge effects can also be eliminated by mapping the region to a boundless object such as a torus or sphere.
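The torus approach can be illustrated with a minimal Python sketch. It assumes a rectangular study area whose opposite edges are glued together, and the function names are hypothetical. On such a surface, two points near opposite edges turn out to be close neighbors rather than distant ones:

```python
import math


def planar_distance(p, q):
    """Ordinary Euclidean distance inside a bounded rectangle."""
    return math.hypot(p[0] - q[0], p[1] - q[1])


def torus_distance(p, q, width, height):
    """Euclidean distance on a width x height rectangle whose opposite edges
    are glued together (a flat torus), so no location lies at an edge.
    Assumes coordinates lie in [0, width) x [0, height)."""
    dx = abs(p[0] - q[0])
    dy = abs(p[1] - q[1])
    # Wrapping around the other way may give a shorter separation.
    dx = min(dx, width - dx)
    dy = min(dy, height - dy)
    return math.hypot(dx, dy)


# Two points hugging opposite edges of a 10 x 10 study area.
a, b = (0.5, 5.0), (9.5, 5.0)
print(planar_distance(a, b))         # 9.0
print(torus_distance(a, b, 10, 10))  # 1.0
```

Because every location then has a complete neighborhood, simulation rules based on neighbors behave the same everywhere.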

Spatial dependency or auto-correlation

A fundamental concept in geography is that nearby entities often share more similarities than entities that are far apart. This idea is often labeled 'Tobler's first law of geography' and may be summarized as "everything is related to everything else, but near things are more related than distant things".[2]

Spatial dependency is the co-variation of properties within geographic space: characteristics at proximal locations appear to be correlated, either positively or negatively. There are at least three possible explanations. One possibility is a simple spatial correlation relationship: whatever is causing an observation in one location also causes similar observations in nearby locations. For example, physical crime rates in nearby areas within a city tend to be similar because of factors such as socio-economic status, the amount of policing, and a built environment that creates opportunities for that kind of crime: the features that attract one criminal will also attract others. Another possibility is spatial causality: something at a given location directly influences the characteristics of nearby locations. For example, the broken windows theory of personal crime suggests that poverty, lack of maintenance and petty physical crime tend to breed more crime of this kind because of the apparent breakdown in order. A third possibility is spatial interaction: the movement of people, goods or information creates apparent relationships between locations. The “journey to crime” theory suggests that criminal activity occurs as a result of accessibility to a criminal’s home, hangout or other key locations in his or her daily activities.

Spatial dependency leads to the spatial autocorrelation problem in statistics because, like temporal autocorrelation, it violates the assumption of independence among observations on which standard statistical techniques rely. For example, regression analyses that do not compensate for spatial dependency can have unstable parameter estimates and yield unreliable significance tests. Spatial regression models (see below) capture these relationships and do not suffer from these weaknesses. It is also appropriate to view spatial dependency as a source of information rather than something to be corrected.

Locational effects also manifest as spatial heterogeneity, or the apparent variation in a process with respect to location in geographic space. Unless a space is uniform and boundless, every location will have some degree of uniqueness relative to the other locations. This affects the spatial dependency relations and therefore the spatial process. Spatial heterogeneity means that overall parameters estimated for the entire system may not adequately describe the process at any given location.

Scaling

Spatial scale is a persistent issue in spatial analysis.

One of these issues is simply a matter of language. Different fields use "large scale" and "small scale" to mean opposite things. Cartographers refer to the mathematical size of the scale ratio, so 1/24000 is 'larger' than 1/100000. Landscape ecologists long referred instead to the extent of their study areas, so continents are 'larger' than forests.

The more fundamental issue of scale requires ensuring that the conclusions of the analysis do not depend on any arbitrary scale. For many years landscape ecologists failed to do this, characterizing landscape elements with quantitative metrics that depended on the scale at which they were measured. They eventually developed a series of scale-invariant metrics.

Sampling

Spatial sampling involves choosing a limited number of locations in geographic space at which to measure faithfully phenomena that are subject to dependency and heterogeneity. Dependency suggests that since one location can predict the value of another location, we do not need observations in both places. But heterogeneity suggests that this relation can change across space, and therefore we cannot trust an observed degree of dependency beyond a region that may be small. Basic spatial sampling schemes include random, clustered and systematic sampling. These basic schemes can be applied at multiple levels in a designated spatial hierarchy (e.g., urban area, city, neighborhood). It is also possible to exploit ancillary data, for example, by using property values as a guide in a spatial sampling scheme to measure educational attainment and income. Spatial models such as autocorrelation statistics, regression and interpolation (see below) can also dictate sample design.
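The three basic schemes can be sketched in Python for a unit-square study area. This is a minimal sketch, not a standard library API: the function names, the square study area and the uniform scatter inside each cluster are assumptions made for illustration.

```python
import random


def random_sample(n, rng):
    """Simple random sample: n locations drawn uniformly in the unit square."""
    return [(rng.random(), rng.random()) for _ in range(n)]


def systematic_sample(k):
    """Systematic sample: the centers of a regular k x k grid of cells."""
    return [((i + 0.5) / k, (j + 0.5) / k) for i in range(k) for j in range(k)]


def _clamp(v):
    """Keep a coordinate inside the unit square."""
    return min(1.0, max(0.0, v))


def clustered_sample(n_clusters, per_cluster, radius, rng):
    """Clustered sample: random cluster centers, then locations scattered
    uniformly within +/- radius of each center (clipped to the square)."""
    points = []
    for _ in range(n_clusters):
        cx, cy = rng.random(), rng.random()
        for _ in range(per_cluster):
            points.append((_clamp(cx + rng.uniform(-radius, radius)),
                           _clamp(cy + rng.uniform(-radius, radius))))
    return points


rng = random.Random(42)  # fixed seed so the draws are reproducible
designs = {
    "random": random_sample(9, rng),
    "systematic": systematic_sample(3),
    "clustered": clustered_sample(3, 3, 0.05, rng),
}
for name, pts in designs.items():
    print(name, [(round(x, 2), round(y, 2)) for x, y in pts[:3]])
```

A hierarchical design could apply any of these functions again inside each unit of the hierarchy.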

Common errors in spatial analysis

The fundamental issues in spatial analysis lead to numerous problems in analysis including bias, distortion and outright errors in the conclusions reached. These issues are often interlinked but various attempts have been made to separate out particular issues from each other.

Length

Benoit Mandelbrot's paper on the coastline of Britain showed that some spatial concepts, such as the length of a coastline, are inherently nonsensical without reference to the scale of measurement, even though their validity is usually presumed. Lengths in ecology depend directly on the scale at which they are measured and experienced. So while surveyors commonly measure the length of a river, this length only has meaning in the context of how relevant the measuring technique is to the question under study.

Figure panels: Britain measured using a long yardstick; Britain measured using a medium yardstick; Britain measured using a short yardstick.
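The yardstick effect can be reproduced in a short Python sketch that uses the Koch curve as a stand-in for a coastline. The Koch curve is a choice made here for illustration and is not the data Mandelbrot analyzed. The vertices at recursion depth k all lie on the limiting curve and are 3^-k apart, so walking the polyline is exactly what a yardstick of that length would measure:

```python
import math


def koch_vertices(p, q, depth):
    """Vertices of a Koch curve from p to q, excluding the end point q."""
    if depth == 0:
        return [p]
    dx, dy = (q[0] - p[0]) / 3, (q[1] - p[1]) / 3
    a = (p[0] + dx, p[1] + dy)              # one third of the way
    b = (p[0] + 2 * dx, p[1] + 2 * dy)      # two thirds of the way
    c, s = 0.5, math.sqrt(3) / 2            # cos 60 deg, sin 60 deg
    peak = (a[0] + dx * c - dy * s, a[1] + dx * s + dy * c)  # bump apex
    vertices = []
    for start, end in ((p, a), (a, peak), (peak, b), (b, q)):
        vertices.extend(koch_vertices(start, end, depth - 1))
    return vertices


def measured_length(depth):
    """Length of a unit Koch 'coastline' measured with a yardstick 3**-depth long."""
    pts = koch_vertices((0.0, 0.0), (1.0, 0.0), depth) + [(1.0, 0.0)]
    return sum(math.dist(pts[i], pts[i + 1]) for i in range(len(pts) - 1))


for depth in range(5):
    print(f"yardstick {3 ** -depth:.4f}: length {measured_length(depth):.4f}")
```

Each threefold reduction of the yardstick multiplies the measured length by 4/3, so the length never settles on a single value.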

Locational fallacy

The locational fallacy refers to error due to the particular spatial characterization chosen for the elements of study, in particular choice of placement for the spatial presence of the element.

Spatial characterizations may be simplistic or even wrong. Studies of humans often reduce the spatial existence of humans to a single point, for instance their home address. This can easily lead to poor analysis, for example, when considering disease transmission which can happen at work or at school and therefore far from the home.

The spatial characterization may implicitly limit the subject of study. For example, the spatial analysis of crime data has recently become popular, but these studies can only describe the particular kinds of crime that can be described spatially. This leads to many maps of assault but no maps of embezzlement, which has political consequences for how crime is conceptualized and for how policies to address it are designed.

Atomic fallacy

This describes errors due to treating elements as separate 'atoms' outside of their spatial context.

Ecological fallacy

The ecological fallacy describes errors due to performing analyses on aggregate data when trying to reach conclusions on the individual units. It is closely related to the modifiable areal unit problem.

Modifiable areal unit problem

The modifiable areal unit problem (MAUP) is an issue in the analysis of spatial data arranged in zones, where the conclusion depends on the particular shape or size of the zones used in the analysis.

Spatial analysis and modeling often involve aggregate spatial units such as census tracts or traffic analysis zones. These units may reflect the convenience of data collection or modeling rather than homogeneous, cohesive regions in the real world. The spatial units are therefore arbitrary or modifiable, and they contain artifacts related to the degree of spatial aggregation or the placement of boundaries.

The problem arises because results derived from an analysis of these zones are known to depend directly on the zones being studied. It has been shown that aggregating point data into zones of different shapes and sizes can lead to opposite conclusions.[3] More detail is available at the modifiable areal unit problem topic entry.
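The zoning effect can be demonstrated with a toy Python sketch. The data are hypothetical and deliberately tiny: four individuals on a 2 x 2 grid, each with two attributes. Aggregating them into vertical strips or into horizontal strips and correlating the zone means gives opposite signs, while the individual-level correlation is different again:

```python
import math
from collections import defaultdict


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def zone_means(records, zone_of):
    """Aggregate (cell, x, y) records into zones; return per-zone means."""
    groups = defaultdict(list)
    for cell, x, y in records:
        groups[zone_of(cell)].append((x, y))
    means_x = [sum(x for x, _ in g) / len(g) for g in groups.values()]
    means_y = [sum(y for _, y in g) / len(g) for g in groups.values()]
    return means_x, means_y


# Hypothetical individuals: ((column, row), attribute x, attribute y).
records = [((0, 0), 1, 2), ((0, 1), 2, 1), ((1, 0), 3, 4), ((1, 1), 4, 3)]

individual = pearson([r[1] for r in records], [r[2] for r in records])
by_column = pearson(*zone_means(records, lambda cell: cell[0]))  # vertical strips
by_row = pearson(*zone_means(records, lambda cell: cell[1]))     # horizontal strips
print(individual, by_column, by_row)  # 0.6 1.0 -1.0
```

With only two zones, any correlation of zone means is trivially ±1. The point is the sign flip, which comes entirely from where the boundaries are drawn. Reading the zone-level result as a statement about individuals would also be an instance of the ecological fallacy.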

Solutions to the fundamental issues

Geographic space

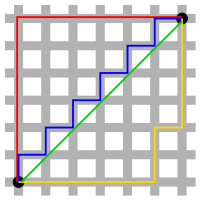

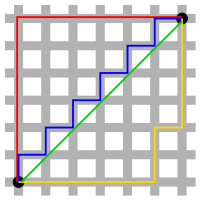

Manhattan distance versus Euclidean distance: The red, blue, and yellow lines have the same length (12) in both Euclidean and taxicab geometry. In Euclidean geometry, the green line has length 6×√2 ≈ 8.49 and is the unique shortest path. In taxicab geometry, the green line's length is still 12, making it no shorter than any other path shown.

A mathematical space exists whenever we have a set of observations and quantitative measures of their attributes. For example, we can represent individuals’ income and years of education within a coordinate system where the location of each individual is specified with respect to both dimensions. The distances between individuals within this space are a quantitative measure of their differences with respect to income and education. In spatial analysis, however, we are concerned with a specific type of mathematical space, namely geographic space. In geographic space, the observations correspond to locations in a spatial measurement framework that captures their proximity in the real world. The locations in a spatial measurement framework often represent locations on the surface of the Earth, but this is not strictly necessary. A spatial measurement framework can also capture proximity with respect to, say, interstellar space or within a biological entity such as a liver. The fundamental tenet is Tobler’s First Law of Geography: if the interrelation between entities increases with proximity in the real world, then representation in geographic space and assessment using spatial analysis techniques are appropriate.

The Euclidean distance between locations often represents their proximity, although this is only one possibility. Besides Euclidean distance, there are infinitely many distance measures that can support quantitative analysis. For example, "Manhattan" (or "Taxicab") distances, where movement is restricted to paths parallel to the axes, can be more meaningful than Euclidean distances in urban settings. In addition to distances, other geographic relationships such as connectivity (e.g., the existence or degree of shared borders) and direction can also influence the relationships among entities. It is also possible to compute minimal cost paths across a cost surface; for example, this can represent proximity among locations when travel must occur across rugged terrain.
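The distance notions above can be sketched in Python. For the cost-surface case this sketch runs Dijkstra's algorithm over a raster. That algorithm is a choice made here for illustration, as are the rules that entering a cell adds that cell's cost and that moves go to the four axis-parallel neighbors:

```python
import heapq
import math


def euclidean(p, q):
    """Straight-line distance between two points."""
    return math.hypot(p[0] - q[0], p[1] - q[1])


def manhattan(p, q):
    """Taxicab distance: movement restricted to axis-parallel paths."""
    return abs(p[0] - q[0]) + abs(p[1] - q[1])


def least_cost(cost, start, goal):
    """Minimal accumulated cost from start to goal on a raster cost surface
    (Dijkstra's algorithm). Entering a cell adds that cell's cost; moves go
    to the four axis-parallel neighbors. Returns math.inf if unreachable."""
    rows, cols = len(cost), len(cost[0])
    best = {start: 0}
    frontier = [(0, start)]
    while frontier:
        acc, (r, c) = heapq.heappop(frontier)
        if (r, c) == goal:
            return acc
        if acc > best.get((r, c), math.inf):
            continue  # stale queue entry; a cheaper route was already found
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < rows and 0 <= nc < cols:
                new_acc = acc + cost[nr][nc]
                if new_acc < best.get((nr, nc), math.inf):
                    best[(nr, nc)] = new_acc
                    heapq.heappush(frontier, (new_acc, (nr, nc)))
    return math.inf


# The diagonal from the figure caption: 6 units east and 6 units north.
print(f"{euclidean((0, 0), (6, 6)):.2f}", manhattan((0, 0), (6, 6)))  # 8.49 12

# A hypothetical 3 x 3 cost surface with an expensive "ridge" in the middle.
terrain = [[1, 1, 1],
           [1, 9, 1],
           [1, 1, 1]]
print(least_cost(terrain, (0, 0), (2, 2)))  # 4: the cheapest route skirts the ridge
```

The same functions can be reused to build spatial weights or proximity inputs for the techniques described in the following sections.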

Types of spatial analysis

Spatial data comes in many varieties and it is not easy to arrive at a system of classification that is simultaneously exclusive, exhaustive, imaginative, and satisfying. -- G. Upton & B. Fingleton[4]

Spatial autocorrelation

Spatial autocorrelation statistics measure and analyze the degree of dependency among observations in a geographic space. Classic spatial autocorrelation statistics include Moran’s I and Geary’s C. These require a spatial weights matrix that reflects the intensity of the geographic relationship between observations in a neighborhood, e.g., the distances between neighbors, the lengths of shared borders, or whether they fall into a specified directional class such as “west.” Classic spatial autocorrelation statistics compare the spatial weights to the covariance relationship at pairs of locations. Spatial autocorrelation that is more positive than expected by chance indicates the clustering of similar values across geographic space. Significant negative spatial autocorrelation indicates that neighboring values are more dissimilar than expected by chance, suggesting a spatial pattern similar to a chessboard.
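Global Moran’s I can be computed in a few lines of Python with the standard formula I = (n / W) · Σᵢⱼ wᵢⱼ zᵢ zⱼ / Σᵢ zᵢ², where zᵢ is the deviation of observation i from the mean and W is the sum of all weights. The following is a minimal sketch. The binary contiguity weights on a one-dimensional transect are an assumption chosen to keep the example small:

```python
def contiguity_weights(n):
    """Binary weights for n cells along a transect: neighbors share an edge."""
    return [[1 if abs(i - j) == 1 else 0 for j in range(n)] for i in range(n)]


def morans_i(values, weights):
    """Global Moran's I for `values` under the spatial weights matrix `weights`."""
    n = len(values)
    mean = sum(values) / n
    dev = [v - mean for v in values]
    w_total = sum(sum(row) for row in weights)
    cross = sum(weights[i][j] * dev[i] * dev[j]
                for i in range(n) for j in range(n))
    return (n / w_total) * cross / sum(d * d for d in dev)


w = contiguity_weights(4)
print(morans_i([1, 1, 0, 0], w))   # clustered values: positive
print(morans_i([1, 0, 1, 0], w))   # alternating "chessboard" values: negative
print(-1 / (4 - 1))                # expected value of I with no autocorrelation
```

In practice, significance is judged against the expected value −1/(n − 1), using either an analytical variance or random permutations of the values.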

Spatial autocorrelation statistics such as Moran’s I and Geary’s C are global in the sense that they estimate the overall degree of spatial autocorrelation for a dataset. The possibility of spatial heterogeneity suggests that the estimated degree of autocorrelation may vary significantly across geographic space. Local spatial autocorrelation statistics provide estimates disaggregated to the level of the spatial analysis units, allowing assessment of the dependency relationships across space. G statistics compare neighborhoods to a global average and identify local regions of strong autocorrelation. Local versions of the I and C statistics are also available.
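A local version of Moran’s I can be sketched in Python using the form given by Anselin (1995): Iᵢ = (zᵢ / m₂) · Σⱼ wᵢⱼ zⱼ, with m₂ = Σᵢ zᵢ² / n. This is only one of several normalizations in use. The transect and its binary contiguity weights are hypothetical, as in the global case:

```python
def contiguity_weights(n):
    """Binary weights for n cells along a transect: neighbors share an edge."""
    return [[1 if abs(i - j) == 1 else 0 for j in range(n)] for i in range(n)]


def local_morans_i(values, weights):
    """Local Moran's I_i for every location (one common normalization)."""
    n = len(values)
    mean = sum(values) / n
    dev = [v - mean for v in values]
    m2 = sum(d * d for d in dev) / n
    return [dev[i] / m2 * sum(weights[i][j] * dev[j] for j in range(n))
            for i in range(n)]


values = [1, 1, 0, 0, 0, 0]          # a small "hot spot" at the west end
w = contiguity_weights(len(values))
local = local_morans_i(values, w)
print(local)
# Positive I_i: a location resembles its neighbors; negative: it differs.
# Summing the local values and dividing by the total weight recovers global I.
print(sum(local) / sum(map(sum, w)))
```

Here the cell on the boundary between high and low values gets a negative local statistic, a pattern that the single global value would hide.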

Spatial interpolation

Spatial interpolation methods estimate the values of variables at unobserved locations in geographic space from the values at observed locations. Basic methods include inverse distance weighting, in which the influence of each observed value on the estimate attenuates with increasing distance from the observed location. Kriging is a more sophisticated method that interpolates across space according to a spatial lag relationship that has both systematic and random components. This can accommodate a wide range of spatial relationships for the hidden values between observed locations. Kriging provides optimal estimates given the hypothesized lag relationship, and error estimates can be mapped to determine if spatial patterns exist.
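Inverse distance weighting can be sketched in Python as a weighted average with weights 1/dᵖ. The power p = 2 is a common default but still an assumption, and the observations are hypothetical:

```python
import math


def idw(observations, target, power=2.0):
    """Inverse distance weighted estimate at `target`.

    `observations` is a list of (x, y, value) tuples. Each observation is
    weighted by 1 / distance**power, so nearer observations count for more.
    """
    num = den = 0.0
    for x, y, value in observations:
        d = math.hypot(x - target[0], y - target[1])
        if d == 0.0:
            return value  # target coincides with an observed location
        w = 1.0 / d ** power
        num += w * value
        den += w
    return num / den


obs = [(0.0, 0.0, 10.0), (2.0, 0.0, 20.0)]
print(idw(obs, (1.0, 0.0)))   # midway: equal weights, so 15.0
print(idw(obs, (0.5, 0.0)))   # nearer the first point: about 11.0
```

Raising the power makes the surface more local, with flatter areas around each observation. Unlike kriging, this method provides no error estimate.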

Spatial regression

Spatial regression methods capture spatial dependency in regression analysis. This avoids statistical problems such as unstable parameters and unreliable significance tests, and it also provides information on spatial relationships among the variables involved. Depending on the specific technique, spatial dependency can enter the regression model as relationships between the independent variables and the dependent variable, between the dependent variable and a spatial lag of itself, or in the error terms. Geographically weighted regression (GWR) is a local version of spatial regression that generates parameters disaggregated by the spatial units of analysis. This allows assessment of the spatial heterogeneity in the estimated relationships between the independent and dependent variables.
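The core idea of geographically weighted regression is to fit a separate weighted least-squares regression at each location, with weights that decay with distance from that location. A minimal Python sketch for a single predictor follows. The Gaussian kernel, the bandwidth and the one-dimensional transect of sites are assumptions, and a full GWR would also select the bandwidth from the data:

```python
import math


def gaussian_kernel(distance, bandwidth):
    """Gaussian distance-decay weight used to localize the regression."""
    return math.exp(-0.5 * (distance / bandwidth) ** 2)


def gwr_at(location, sites, xs, ys, bandwidth):
    """Local intercept and slope of y = a + b*x at `location`, estimated by
    weighted least squares with weights that decay with distance."""
    w = [gaussian_kernel(abs(s - location), bandwidth) for s in sites]
    sw = sum(w)
    mx = sum(wi * x for wi, x in zip(w, xs)) / sw
    my = sum(wi * y for wi, y in zip(w, ys)) / sw
    sxy = sum(wi * (x - mx) * (y - my) for wi, x, y in zip(w, xs, ys))
    sxx = sum(wi * (x - mx) ** 2 for wi, x in zip(w, xs))
    slope = sxy / sxx
    return my - slope * mx, slope


# Hypothetical transect of ten sites: the x-y relationship has slope 2 in
# the west (sites 0-4) and slope -1 in the east (sites 5-9).
sites = list(range(10))
xs = [float(s) for s in sites]
ys = [2 * x if s < 5 else -x for s, x in zip(sites, xs)]

print(gwr_at(0, sites, xs, ys, bandwidth=0.5))  # slope close to 2
print(gwr_at(9, sites, xs, ys, bandwidth=0.5))  # slope close to -1
```

A single global regression on the same data would return one compromise slope that describes neither half of the transect. This is the spatial heterogeneity that GWR is designed to expose.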

Spatial interaction

Spatial interaction models, or "gravity models", estimate the flow of people, material or information between locations in geographic space. Factors can include origin propulsive variables such as the number of commuters in residential areas, destination attractiveness variables such as the amount of office space in employment areas, and proximity relationships between the locations measured in terms such as driving distance or travel time. In addition, the topological, or connective, relationships between areas must be identified, particularly considering the often conflicting relationship between distance and topology; for example, two spatially close neighborhoods may not display any significant interaction if they are separated by a highway. After specifying the functional forms of these relationships, the analyst can estimate model parameters using observed flow data and standard estimation techniques such as ordinary least squares or maximum likelihood. Competing-destinations versions of spatial interaction models include the proximity among the destinations (or origins) in addition to the origin-destination proximity; this captures the effects of destination (origin) clustering on flows. Computational methods such as artificial neural networks can also estimate spatial interaction relationships among locations and can handle noisy and qualitative data.
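An unconstrained gravity model and its calibration by ordinary least squares can be sketched in Python. This sketch assumes the simple form Tᵢⱼ = k · Oᵢ · Dⱼ / dᵢⱼ^β, with the exponents on origin and destination masses fixed at 1, which makes the model linear in logs: log(Tᵢⱼ / (Oᵢ Dⱼ)) = log k − β log dᵢⱼ. The flows are synthetic and noise-free, so the known parameters should come back:

```python
import math


def gravity_flow(k, origin_mass, dest_mass, distance, beta):
    """Unconstrained gravity model: T_ij = k * O_i * D_j / d_ij ** beta."""
    return k * origin_mass * dest_mass / distance ** beta


def calibrate(flows):
    """Estimate (k, beta) by ordinary least squares on the log-linear form
    log(T / (O * D)) = log k - beta * log d.

    `flows` is a list of (O_i, D_j, d_ij, T_ij) tuples."""
    xs = [math.log(d) for _, _, d, _ in flows]
    ys = [math.log(t / (o * dest)) for o, dest, _, t in flows]
    n = len(flows)
    mx, my = sum(xs) / n, sum(ys) / n
    slope = (sum((x - mx) * (y - my) for x, y in zip(xs, ys))
             / sum((x - mx) ** 2 for x in xs))
    return math.exp(my - slope * mx), -slope


# Synthetic flows generated with k = 0.5 and beta = 2 (hypothetical values).
pairs = [(100, 200, 2.0), (300, 50, 5.0), (80, 120, 1.5), (150, 150, 10.0)]
observed = [(o, dest, d, gravity_flow(0.5, o, dest, d, 2.0)) for o, dest, d in pairs]
print(calibrate(observed))  # approximately (0.5, 2.0)
```

With real, noisy flows, OLS on logged counts has known drawbacks (for example, zero flows cannot be logged). Maximum-likelihood estimation, also mentioned in the text, is often preferred in such cases.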

Simulation and modeling

Spatial interaction models are aggregate and top-down: they specify an overall governing relationship for flow between locations. This characteristic is also shared by urban models such as those based on mathematical programming, flows among economic sectors, or bid-rent theory. An alternative modeling perspective is to represent the system at the highest possible level of disaggregation and study the bottom-up emergence of complex patterns and relationships from behavior and interactions at the individual level. ...

Complex adaptive systems theory as applied to spatial analysis suggests that simple interactions among proximal entities can lead to intricate, persistent and functional spatial entities at aggregate levels. Two fundamentally spatial simulation methods are cellular automata and agent-based modeling. Cellular automata modeling imposes a fixed spatial framework such as grid cells and specifies rules that dictate the state of a cell based on the states of its neighboring cells. As time progresses, spatial patterns emerge as cells change states based on their neighbors; this alters the conditions for future time periods. For example, cells can represent locations in an urban area and their states can be different types of land use. Patterns that can emerge from the simple interactions of local land uses include office districts and urban sprawl. Agent-based modeling uses software entities (agents) that have purposeful behavior (goals) and can react, interact and modify their environment while seeking their objectives. Unlike the cells in cellular automata, agents can be mobile with respect to space. For example, one could model traffic flow and dynamics using agents representing individual vehicles that try to minimize travel time between specified origins and destinations. While pursuing minimal travel times, the agents must avoid collisions with other vehicles also seeking to minimize their travel times. Cellular automata and agent-based modeling are divergent yet complementary modeling strategies. They can be integrated into a common geographic automata system where some agents are fixed while others are mobile.
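A very small cellular automaton of urban growth can be sketched in Python. The transition rule is hypothetical and chosen for illustration: a non-urban cell (0) becomes urban (1) when at least `threshold` of its eight neighbors are urban, urban cells stay urban, and all cells update simultaneously from the previous state.

```python
def count_urban_neighbors(grid, r, c):
    """Count urban cells among the eight neighbors of (r, c)."""
    rows, cols = len(grid), len(grid[0])
    total = 0
    for dr in (-1, 0, 1):
        for dc in (-1, 0, 1):
            nr, nc = r + dr, c + dc
            if (dr or dc) and 0 <= nr < rows and 0 <= nc < cols:
                total += grid[nr][nc]
    return total


def step(grid, threshold):
    """One synchronous update of the whole grid: every new state is computed
    from the old grid, so the update order of cells does not matter."""
    return [[1 if grid[r][c] == 1 or count_urban_neighbors(grid, r, c) >= threshold
             else 0
             for c in range(len(grid[0]))]
            for r in range(len(grid))]


grid = [[0] * 5 for _ in range(5)]
grid[2][2] = 1                      # a single urban seed in the center
for _ in range(2):
    grid = step(grid, threshold=1)
for row in grid:
    print("".join("#" if cell else "." for cell in row))
```

With `threshold=1` the seed spreads as sprawl. With a higher threshold, an isolated seed never grows, and development happens only where urban cells are already dense. Even this toy rule shows how the local rule controls the aggregate pattern that emerges.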

Geographic information science and spatial analysis

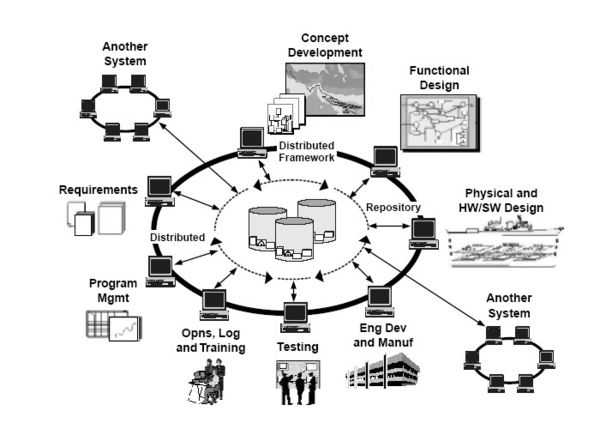

Geographic information systems (GIS) and the underlying geographic information science that advances these technologies have a strong influence on spatial analysis. The increasing ability to capture and handle geographic data means that spatial analysis is occurring within increasingly data-rich environments. Geographic data capture systems include remotely sensed imagery, environmental monitoring systems such as intelligent transportation systems, and location-aware technologies such as mobile devices that can report location in near-real time. GIS provide platforms for managing these data, computing spatial relationships such as distance, connectivity and directional relationships between spatial units, and visualizing both the raw data and spatial analytic results within a cartographic context.

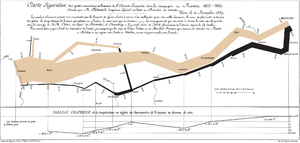

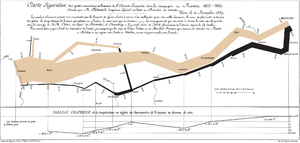

This flow map of Napoleon's ill-fated march on Moscow is an early and celebrated example of geovisualization. It shows the army's direction as it traveled, the places the troops passed through, the size of the army as troops died from hunger and wounds, and the freezing temperatures they experienced.

Geovisualization (GVis) combines scientific visualization with digital cartography to support the exploration and analysis of geographic data and information, including the results of spatial analysis or simulation. GVis leverages the human orientation towards visual information processing in the exploration, analysis and communication of geographic data and information. In contrast with traditional cartography, GVis is typically three- or four-dimensional (the latter including time) and user-interactive.

Geographic knowledge discovery (GKD) is the human-centered process of applying efficient computational tools for exploring massive spatial databases. GKD includes geographic data mining, but also encompasses related activities such as data selection, data cleaning and pre-processing, and interpretation of results. GVis can also serve a central role in the GKD process. GKD is based on the premise that massive databases contain interesting (valid, novel, useful and understandable) patterns that standard analytical techniques cannot find. GKD can serve as a hypothesis-generating process for spatial analysis, producing tentative patterns and relationships that should be confirmed using spatial analytical techniques.

Spatial Decision Support Systems (sDSS) take existing spatial data and use a variety of mathematical models to make projections into the future. This allows urban and regional planners to test intervention decisions prior to implementation.

See also

- Complete spatial randomness

- Geodemographic segmentation

- Visibility analysis

- Suitability analysis

- Geospatial predictive modeling

- Geostatistics

- Extrapolation domain analysis

- Geoinformatics

References

- ^ Monmonier, M. (1996). How to Lie with Maps. University of Chicago Press.

- ^ Tobler, W. (1970). A computer movie simulating urban growth in the Detroit region. Economic Geography, 46, 234-240.

- ^ Longley, P. and M. Batty (eds.). Spatial Analysis: Modelling in a GIS Environment, pp. 24-25.

- ^ Upton, G. J. and B. Fingleton (1985). Spatial Data Analysis by Example, Volume 1: Point Pattern and Quantitative Data. New York: John Wiley & Sons.

Further reading

- Abler, R., J. Adams, and P. Gould (1971) Spatial Organization–The Geographer's View of the World, Englewood Cliffs, NJ: Prentice-Hall.

- Anselin, L. (1995) "Local indicators of spatial association – LISA". Geographical Analysis, 27, 93–115.

- Benenson, I. and P. M. Torrens. (2004). Geosimulation: Automata-Based Modeling of Urban Phenomena. Wiley.

- Fotheringham, A. S., C. Brunsdon and M. Charlton (2000) Quantitative Geography: Perspectives on Spatial Data Analysis, Sage.

- Fotheringham, A. S. and M. E. O'Kelly (1989) Spatial Interaction Models: Formulations and Applications, Kluwer Academic

- Fotheringham, A. S. and P. A. Rogerson (1993) "GIS and spatial analytical problems". International Journal of Geographical Information Systems, 7, 3–19.

- Goodchild, M. F. (1987) "A spatial analytical perspective on geographical information systems". International Journal of Geographical Information Systems, 1, 327–44.

- MacEachren, A. M. and D. R. F. Taylor (eds.) (1994) Visualization in Modern Cartography, Pergamon.

- Miller, H. J. (2004) "Tobler's First Law and spatial analysis". Annals of the Association of American Geographers, 94, 284–289.

- Miller, H. J. and J. Han (eds.) (2001) Geographic Data Mining and Knowledge Discovery, Taylor and Francis.

- O'Sullivan, D. and D. Unwin (2002) Geographic Information Analysis, Wiley.

- Parker, D. C., S. M. Manson, M.A. Janssen, M. J. Hoffmann and P. Deadman (2003) "Multi-agent systems for the simulation of land-use and land-cover change: A review". Annals of the Association of American Geographers, 93, 314–337.

- White, R. and G. Engelen (1997) "Cellular automata as the basis of integrated dynamic regional modelling". Environment and Planning B: Planning and Design, 24, 235–246.

External links

- ICA commission on geospatial analysis and modeling

- An educational resource about spatial statistics and geostatistics

- A comprehensive guide to principles, techniques & software tools

- Social and Spatial Inequalities

- National Center for Geographic Information and Analysis (NCGIA)